There are many techniques for digitising our environment in 3D. Image-based methods such as photogrammetry, videogrammetry, NeRF and Gaussian Splatting all use imagery captured with different kinds of cameras, from still cameras to video cameras, to generate highly detailed, photo-realistic 3D models.

There are also instruments that produce 3D models from laser pulses (LiDAR). These systems cover different ranges: short-range (0.5–4 m), medium-range (1–300 m) and long-range (>300 m). Their accuracies range from microns to a few centimetres. Laser scanners can be static (they must remain still while capturing, typically on a tripod) or dynamic (mobile), in which case the scanner moves during 3D acquisition. In addition, these systems may include cameras to colourise the points they capture.

But do we always need to generate a full 3D model to digitise an object or scene? If we needed to digitise a dwelling, we should first consider what the digitisation will be used for. If the goal were to create a floor plan for a robot vacuum to navigate, we would certainly not need precise details of every object; it would be enough to know the objects the robot could interact with. Likewise, if a builder needed a plan showing where the walls are and their surface areas, there would be no need to digitise the rest of the household items, such as beds, furniture or appliances.

For this reason, there is no one-size-fits-all digitisation technique; rather, the purpose behind digitising a scene should determine the type of digitisation to be performed.

Root-Driven Mapping (RDM)

We can use mass data-capture instruments (such as LiDAR or 360° video systems) to record the environment, but these approaches generally require a subsequent step of selecting and extracting objects until we obtain exactly the result we need. At 2freedom, within the MOTIVATE XR project, we aim to go a step further and take digitisation to another level:

2freedom: Goal-Oriented Intelligent Digitisation

At 2freedom we are developing a new digitisation philosophy based on the premise that you should not capture everything – only what is relevant to the end goal.

The process always begins with a precise identification of the digitisation objectives. Before starting capture, we conduct a thorough analysis of the purpose to determine:

- which elements need to be digitised,

- what information must be obtained from each one, and

- the required level of detail and accuracy.

In this way, we decide which attributes need to be recorded: position, size, orientation, colour, shape, geometry (at a greater or lesser level of detail), textures or semantic information (for example, identifying that an object is a table or a chair). In many cases, depending on the documentation required, not all of these attributes are needed. The key is to adapt the type and amount of information to the final objective.
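To make the idea concrete, the requirements analysis can be captured as a small data structure. The following Python sketch is purely illustrative: the class names (`Attribute`, `ElementRequirement`, `DigitisationGoal`) and the accuracy values are hypothetical and are not part of any 2freedom tool. It encodes the two dwelling scenarios from the introduction and derives the set of attributes that actually need to be captured.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class Attribute(Enum):
    """Attributes that a digitisation goal may require (from the list above)."""
    POSITION = auto()
    SIZE = auto()
    ORIENTATION = auto()
    COLOUR = auto()
    SHAPE = auto()
    GEOMETRY = auto()
    TEXTURE = auto()
    SEMANTICS = auto()


@dataclass(frozen=True)
class ElementRequirement:
    category: str          # element to digitise, e.g. "wall"
    attributes: frozenset  # subset of Attribute values to record
    accuracy_m: float      # required accuracy in metres (illustrative)


@dataclass
class DigitisationGoal:
    name: str
    elements: list = field(default_factory=list)

    def required_attributes(self) -> set:
        """Union of every attribute any element needs; nothing else is captured."""
        out = set()
        for element in self.elements:
            out |= element.attributes
        return out

    def categories(self) -> set:
        return {element.category for element in self.elements}


# The two dwelling scenarios from the introduction; accuracies are made-up examples.
robot_vacuum = DigitisationGoal("robot vacuum floor plan", [
    ElementRequirement("wall", frozenset({Attribute.POSITION, Attribute.SHAPE}), 0.05),
    ElementRequirement("furniture", frozenset({Attribute.POSITION, Attribute.SIZE}), 0.05),
])
builder = DigitisationGoal("builder wall survey", [
    ElementRequirement("wall", frozenset({Attribute.POSITION, Attribute.SIZE, Attribute.GEOMETRY}), 0.01),
])
```

Neither goal requires colour or texture, so a capture plan derived from them would not need to record those attributes at all.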

Once the documentation requirements are defined, we move to the second phase: selecting sensors and capture technologies capable of providing only the necessary information. Our approach seeks to avoid collecting redundant or irrelevant data from the moment of acquisition, which allows us to:

- drastically reduce data volume,

- minimise processing time and cost, and

- optimise the efficiency and accuracy of the entire workflow.
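Choosing sensors that provide exactly the required attributes resembles a weighted set-cover problem. The sketch below uses the standard greedy heuristic (pick the sensor that covers the most missing attributes per unit of data cost) as one possible way to reason about this; the sensor names, capabilities and costs are hypothetical, and this is not presented as 2freedom's selection method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str
    provides: frozenset  # attributes this sensor can deliver
    data_cost: float     # hypothetical relative data volume per capture


def select_sensors(required: set, catalogue: list) -> list:
    """Greedy weighted set cover: repeatedly pick the sensor that covers the
    most still-missing attributes per unit of data cost."""
    missing = set(required)
    chosen = []
    while missing:
        candidates = [s for s in catalogue if s.provides & missing]
        if not candidates:
            raise ValueError(f"no sensor provides: {sorted(missing)}")
        best = max(candidates, key=lambda s: len(s.provides & missing) / s.data_cost)
        chosen.append(best)
        missing -= best.provides
    return chosen


# Hypothetical catalogue; capabilities and costs are illustrative only.
CATALOGUE = [
    Sensor("laser_scanner", frozenset({"position", "size", "shape", "geometry"}), 10.0),
    Sensor("rgb_video", frozenset({"position", "size", "shape", "colour", "texture", "semantics"}), 4.0),
    Sensor("gnss_rtk", frozenset({"position"}), 0.1),
]
```

For a goal needing only position and semantics, the greedy rule picks the low-cost RTK receiver first and then the video camera, never selecting the high-cost laser scanner in this made-up catalogue. Greedy set cover is an approximation and does not always find the cheapest combination.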

Intelligent Digitisation with AI

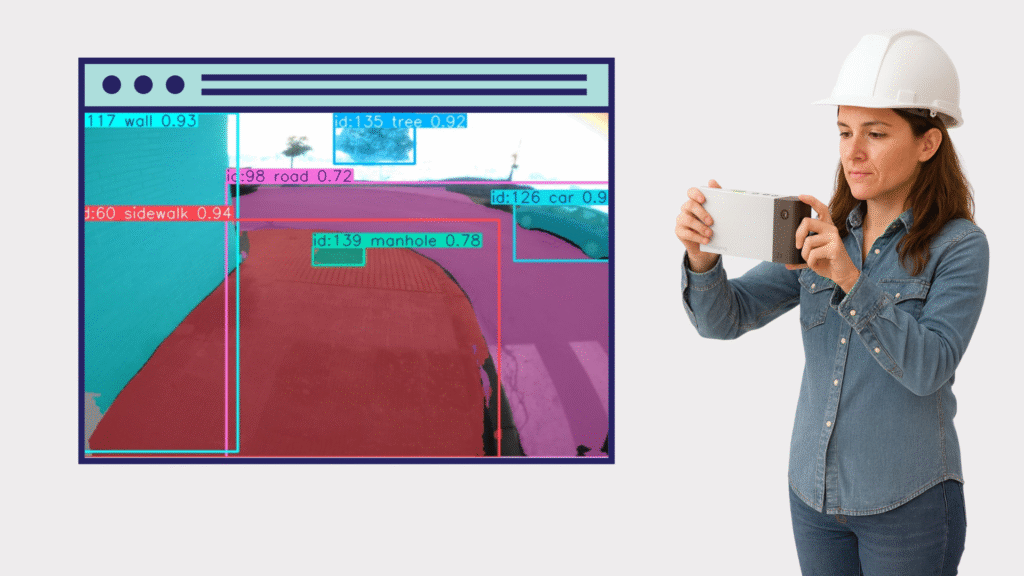

To put this philosophy into practice, at 2freedom we are researching and developing intelligent sensors equipped with Artificial Intelligence, capable of semantic and selective digitisation. These sensors can:

- automatically detect which object they are observing,

- obtain its semantic information (e.g., identify whether it is a table, a chair, or an urban element),

- decide whether it is the object to be measured or not, and

- determine which features should be measured (such as the centre point, centroid, shape or surface).
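The decision logic in the list above can be sketched as a per-detection rule: a detection is measured only if its category belongs to the project goal and the model is confident enough, and the goal dictates which feature is measured. The `MEASUREMENT_PLAN` categories, feature names and the 0.6 confidence threshold below are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Detection:
    label: str    # semantic class predicted by the segmentation model
    score: float  # model confidence in [0, 1]


# Hypothetical project plan: which categories matter and which feature to measure.
MEASUREMENT_PLAN = {
    "manhole_cover": "centroid",
    "kerb": "polyline",
    "facade": "surface",
}


def plan_measurement(det: Detection, plan: dict, min_score: float = 0.6) -> Optional[str]:
    """Return the feature to measure for this detection, or None to skip it."""
    if det.score < min_score:
        return None             # too uncertain: leave it to the operator
    return plan.get(det.label)  # None when the category is not part of the goal
```

Detections that return `None` (out-of-scope categories or uncertain masks) are natural candidates for the operator validation step described next.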

Thus, the system measures only what is truly needed and avoids superfluous information. Moreover, because these are image-based sensors, they allow direct user interaction during capture via the screen: the operator can view objects in real time, actively select them and validate the information before recording it. These instruments can even guide the user to the elements that must be measured, optimising data collection and ensuring that the defined objectives are met.

This goal-driven, AI-assisted capture represents a radical transformation of geometric and documentary digitisation. It reduces post-processing complexity, improves operational efficiency, and matches precision and detail exactly to the needs of each project.

AI and Its Integration with the Videogrammetric Process

Videogrammetry can be understood as a chain of three main phases. The first is capture: the operator moves around the scene while the scanner records continuous video or a rapid sequence of images. Each frame is saved together with whatever sensor data the device provides (timestamps, IMU readings, GNSS positions or V-SLAM pose estimates). Because capture is continuous, the system can provide interactive guidance in real time, highlighting objects, suggesting viewpoints or warning of motion blur.
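Motion-blur warnings are often based on a simple sharpness measure. A minimal sketch, assuming greyscale frames as NumPy arrays, uses the variance of the Laplacian: blurred frames have weak edges and therefore a low variance. This is a common heuristic, not necessarily what 2fImaging uses, and the threshold of 100 is an arbitrary placeholder that would need tuning per camera and scene.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour Laplacian over the image interior."""
    g = gray.astype(np.float64)
    lap = (g[:-2, 1:-1] + g[2:, 1:-1] + g[1:-1, :-2] + g[1:-1, 2:]
           - 4.0 * g[1:-1, 1:-1])
    return float(lap.var())


def blur_warning(gray: np.ndarray, threshold: float = 100.0) -> bool:
    """True if the frame looks blurred (placeholder threshold, tune per camera)."""
    return laplacian_variance(gray) < threshold
```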

The second phase is frame selection. Here, an algorithm designed by 2freedom analyses the video and chooses an optimal subset of images for subsequent processing. This pre-selection, based on criteria such as sufficient overlap, diversity of viewing angles (parallax), sharpness and exposure, reduces redundancy and improves both the precision and the efficiency of the reconstruction.
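2freedom's frame-selection algorithm is not described here, so the following is only a simplified stand-in that illustrates two of the listed criteria. Assuming each frame has a camera position (e.g. from V-SLAM) and a sharpness score (for instance the Laplacian variance), it discards blurred frames and keeps a frame only once the camera has moved by at least a minimum baseline since the last kept frame, trading redundancy against parallax. Function and parameter names are hypothetical.

```python
import numpy as np


def select_keyframes(positions, sharpness, min_baseline, min_sharpness):
    """Return indices of frames that are sharp enough and at least
    `min_baseline` away from the previously selected frame."""
    positions = np.asarray(positions, dtype=float)
    selected = []
    for i, (pos, sharp) in enumerate(zip(positions, sharpness)):
        if sharp < min_sharpness:
            continue  # reject blurred frames outright
        if not selected or np.linalg.norm(pos - positions[selected[-1]]) >= min_baseline:
            selected.append(i)
    return selected
```

A real selector would also bound the maximum baseline so that consecutive frames keep enough overlap, and would account for viewing direction, not only position.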

The third phase is alignment and reconstruction. The selected frames are aligned by estimating camera poses, which are then refined together with the 3D points by a non-linear optimisation process known as bundle adjustment. From there, the system can reconstruct the 3D geometry, progressing from depth maps to a fused point cloud and finally to a mesh, or, when a full model is not required, focus on triangulating specific elements such as centroids, endpoints or sampled contours.

Throughout this pipeline, automatic AI-based segmentation plays a key role. During capture, segmentation models process frames in real time to identify candidate objects and display them to the operator, guiding acquisition towards better angles or complementary views. Later, during reconstruction and measurement, the resulting masks delimit the pixels corresponding to each object. These masks are the basis for extracting centroids, contours or surfaces, or for driving multi-view reconstructions centred on a specific instance.
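To illustrate how a mask restricts computation to one object, the sketch below back-projects only the masked pixels of a depth map into world coordinates. It assumes a pinhole camera with intrinsic matrix `K`, a depth map storing distance along the optical axis, an OpenCV-style camera frame (x right, y down, z forward) and a camera-to-world pose `(R, t)`; the function name is hypothetical.

```python
import numpy as np


def backproject_masked_depth(depth, mask, K, R, t):
    """Back-project masked pixels with valid depth into world coordinates.

    depth: HxW depth along the camera z axis; mask: HxW boolean object mask;
    K: 3x3 intrinsics; R, t: camera-to-world rotation (3x3) and translation (3,).
    """
    v, u = np.nonzero(mask & (depth > 0))  # rows are v, columns are u
    z = depth[v, u]
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts_cam = np.stack([x, y, z], axis=1)  # N x 3 in the camera frame
    return pts_cam @ R.T + t               # N x 3 in the world frame
```

Pixels outside the mask are never converted, so background geometry does not enter the point cloud.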

From these segmentations, the system can produce different measurement modalities. For point elements, such as the centre of a bolt or the top of a lamp post, a sub-pixel centroid is computed from the mask and triangulated across multiple views to obtain the 3D point. For linear elements, such as a kerb, a pole or a tree-trunk axis, 2D lines are extracted from the masks, keypoints are sampled and a 3D polyline is reconstructed, with the option of fitting parametric models. Finally, for full surfaces or volumes, mask-guided multi-view stereo generates depth maps, fuses them into a point cloud and optionally produces a closed, textured mesh. The major benefit is that computation focuses solely on the segmented objects, avoiding noise and irrelevant geometry from the surroundings.
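Two of these measurement modalities reduce to very small computations. The sketch below, with hypothetical function names, computes a sub-pixel centroid as the weighted mean of pixel coordinates (first-order image moments, which also works on soft probability masks) and fits a straight 3D line, one of the simplest parametric models, to triangulated points via SVD. Pixel centres are assumed to lie at integer coordinates.

```python
import numpy as np


def mask_centroid(mask: np.ndarray) -> tuple:
    """Sub-pixel centroid (u, v) of a binary or soft mask from first-order moments."""
    m = mask.astype(np.float64)
    total = m.sum()
    if total == 0:
        raise ValueError("empty mask")
    v, u = np.indices(m.shape)  # v = row index, u = column index
    return float((u * m).sum() / total), float((v * m).sum() / total)


def fit_line_3d(points):
    """Least-squares 3D line: returns (point on line, unit direction)."""
    p = np.asarray(points, dtype=float)
    centre = p.mean(axis=0)
    # The first right singular vector of the centred points is the direction
    # of maximum spread, i.e. the best-fit line direction.
    _, _, vt = np.linalg.svd(p - centre)
    return centre, vt[0]
```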

The backbone of the process is triangulation and optimisation. First comes initialisation, either via feature matching or with the help of sensors such as IMU, GNSS or V-SLAM. Observations are then triangulated using algebraic methods such as the direct linear transform (DLT) or least squares. Finally, everything is refined with bundle adjustment, which minimises reprojection errors and uses robust loss functions to down-weight outliers caused by segmentation errors or incorrect correspondences.
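The algebraic triangulation step can be sketched with the standard DLT formulation: each view contributes two linear equations in the homogeneous point, and the solution is the right singular vector with the smallest singular value. The example also shows reprojection error and a Huber loss, one common robust loss that grows linearly rather than quadratically for large residuals. It is a textbook sketch with hypothetical helper names, not 2freedom's implementation; in practice pixel coordinates are usually normalised first for better numerical conditioning.

```python
import numpy as np


def project(P, X):
    """Project a 3D point X with a 3x4 camera matrix P to pixel coordinates."""
    x = np.asarray(P, dtype=float) @ np.append(X, 1.0)
    return x[:2] / x[2]


def triangulate_dlt(Ps, uvs):
    """Linear (DLT) triangulation of one 3D point from two or more views."""
    rows = []
    for P, (u, v) in zip(Ps, uvs):
        P = np.asarray(P, dtype=float)
        rows.append(u * P[2] - P[0])  # u * (p3 . X) - (p1 . X) = 0
        rows.append(v * P[2] - P[1])  # v * (p3 . X) - (p2 . X) = 0
    _, _, vt = np.linalg.svd(np.array(rows))
    X = vt[-1]                        # least-squares null-space solution
    return X[:3] / X[3]


def reprojection_errors(Ps, uvs, X):
    """Pixel distance between each observation and the reprojected point."""
    return np.array([np.linalg.norm(project(P, X) - np.asarray(uv, dtype=float))
                     for P, uv in zip(Ps, uvs)])


def huber(r, delta=1.0):
    """Huber loss: quadratic for |r| <= delta, linear beyond, limiting outlier influence."""
    a = np.abs(r)
    return np.where(a <= delta, 0.5 * a ** 2, delta * (a - 0.5 * delta))
```

In bundle adjustment the robust loss is applied to every residual, so a single bad mask or mismatch pulls the solution far less than it would under plain least squares.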

In practice, good results require precise camera calibration (intrinsic parameters and lens distortion) and the ability to integrate IMU or V-SLAM data to improve frame selection and initial alignment. Georeferencing options such as RTK GNSS, coded targets or surveyed control points are also crucial for placing the model in a metric, real-world coordinate system.
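Georeferencing with control points typically comes down to estimating a 7-parameter similarity transform (scale, rotation, translation) from model coordinates to surveyed coordinates. A minimal sketch using Umeyama's closed-form least-squares method follows; it assumes at least three non-collinear correspondences and target coordinates in a projected metric system (such as UTM), not latitude/longitude. The function name is illustrative.

```python
import numpy as np


def umeyama(src, dst):
    """Least-squares similarity transform with dst ~= s * R @ src + t (Umeyama's method).

    src, dst: N x 3 arrays of corresponding points (N >= 3, non-collinear).
    Returns scale s, rotation R (3x3, det = +1) and translation t (3,).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    xs, xd = src - mu_s, dst - mu_d
    cov = xd.T @ xs / n                     # cross-covariance of the point sets
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0                      # avoid returning a reflection
    R = U @ S @ Vt
    var_s = (xs ** 2).sum() / n
    s = np.trace(np.diag(D) @ S) / var_s
    t = mu_d - s * R @ mu_s
    return s, R, t
```

Applying `s * R @ p + t` to every model point `p` places the whole model in the control-point frame; the residuals at the control points give a direct check on georeferencing accuracy.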

Regarding segmentation quality, we use AI models trained at 2freedom on the target categories. The operator will be able to accept or reject segmentations in real time through simple controls, supported by visual overlays showing detected masks, recommended viewpoints and a capture-quality score for each object.
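The article does not define how the per-object capture-quality score is computed. As a purely hypothetical illustration, a score could combine how many views observe the object, the widest angle between viewing rays (a proxy for triangulation geometry) and the mean mask confidence. The function name, targets and multiplicative combination below are all assumptions.

```python
import numpy as np


def capture_quality(camera_centres, point, mask_scores,
                    target_views=5, target_angle_deg=15.0):
    """Hypothetical 0-1 score: views x ray-angle spread x mean mask confidence."""
    C = np.asarray(camera_centres, dtype=float)
    rays = np.asarray(point, dtype=float) - C
    rays /= np.linalg.norm(rays, axis=1, keepdims=True)
    n = len(C)
    if n < 2:
        max_angle = 0.0  # a single view cannot triangulate the object
    else:
        cosines = np.clip(rays @ rays.T, -1.0, 1.0)
        max_angle = float(np.degrees(np.arccos(cosines.min())))
    view_term = min(n / target_views, 1.0)
    angle_term = min(max_angle / target_angle_deg, 1.0)
    conf_term = float(np.mean(mask_scores)) if len(mask_scores) else 0.0
    return view_term * angle_term * conf_term
```

An overlay could then colour each detected object by this score, prompting the operator to add views until it reaches an acceptable level.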

All of this means that videogrammetry with automatic segmentation not only enables highly precise digitisation of objects and scenes, but also provides a far more efficient, guided workflow tailored to the specific needs of each project.

The Videogrammetric Camera in MOTIVATE XR

At 2Freedom we have developed 2fImaging, an advanced videogrammetric scanner that uses artificial intelligence to identify and segment the categories it has been trained on. This portable scanner is designed to integrate seamlessly with GNSS receivers (including GPS) and RTK positioning, and incorporates a GPU-based processing system and an internal IMU, allowing it to operate even where there is no GNSS signal or line of sight to a total station.

2fImaging enables:

- High-precision measurement of individual points.

- Capture and reconstruction of three-dimensional models.

- Detection and classification of predefined semantic categories, thanks to its automatic segmentation system.

Using our videogrammetric systems within the MOTIVATE XR project is both an exciting challenge and a significant innovation opportunity. We are confident this integration will deliver substantial benefits to industry, enhancing precision, efficiency and the capacity for spatial data analysis across professional applications.

Authors

2Freedom Imaging

Pedro Ortiz-Coder, PhD in Geotechnologies, is co-founder, CEO and CTO of 2Freedom Imaging Software & Hardware S.L. An expert in videogrammetry, Visual SLAM and intelligent 3D digitisation, he leads the development of innovative hardware and software for surveying, construction and heritage. He combines academic and industrial experience, driving disruptive solutions in spatial data capture and analysis.

2Freedom Imaging

Jesús Romero Porras, a graduate in Computer Engineering specialising in Software Engineering, focuses on data processing for 3D model generation at 2Freedom Imaging Software & Hardware S.L. He has experience in programming, algorithms and workflows for handling spatial information, transforming complex data into accurate digital representations.